In my old job at Telogis, we had the fun task of adding up vehicle fuel, and distance, to calculate things like fuel efficiency.

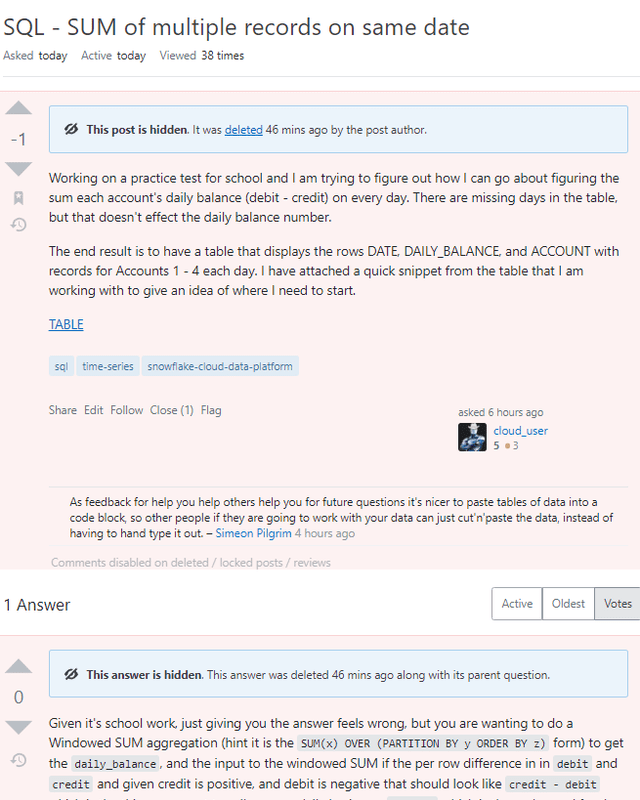

So we might have some data that looks like:

with data(vehicle_id, time_ts, dist_delta_km, fuel_delta_lt ) as (

select

$1,

try_to_timestamp_ntz($2, 'yyyy-mm-dd hh:mi:ss'),

try_to_number($3, 10, 1),

try_to_number($4, 10, 1)

from values

(1, '2023-10-30 10:00:00', '10.0', '0.5'),

(1, '2023-10-30 11:00:00', '5.0', '0.3')

)

and from that we would break it into days, and sum it up, like this sort-of:

select vehicle_id

,time_ts::date as day

,sum(dist_delta_km) as dist_km

,sum(fuel_delta_lt) as fuel_lt

,round(dist_km / fuel_lt, 2) as mpg_wink

from data

group by 1,2

order by 1,2;

| VEHICLE_ID |

DAY |

DIST_KM |

FUEL_LT |

MPG_WINK* |

| 1 |

2023-10-30 |

15 |

0.8 |

18.75 |

*Yes, that is not mpg, but I do not like the lt/km metric, and mpg is just the word I will use.

And sure enough, for a large fleet looking at a big picture of a week, this makes sense.

But if your data is unaligned, aka you get distance on most data rows, but only get fuel intermittently, over time you can get things like.

(1, '2023-10-29 20:00:00', '10.0', null),

(1, '2023-10-30 00:01:00', null, '5.0'),

(1, '2023-10-30 00:20:00', '25.0', null),

(1, '2023-10-30 00:21:00', null, '1.0')

So this data we have a 8pm distance, just after midnight we get a fuel reading, later some more distance and a smaller fuel change. Now this is a little odd, as the data is shown in delta (change) form

where the gold standard of data in my opinion is absolute (aka the odometer style) as if data is missing the change is still calculable. With this form there is an implicit “last fuel reading” that the after midnight value is “since”.

But all those nit-picking details aside, the core point here is that, “the fuel mostly belongs to the 29th not the 30th”. So how might we share the love?

We linearize it!

So if we mash a zero fuel reading into the delta data, to give us a “when is this data since”, like so:

(1, '2023-10-29 19:59:00', null, '0.0'),

(1, '2023-10-29 20:00:00', '10.0', null),

(1, '2023-10-30 00:01:00', null, '5.0'),

(1, '2023-10-30 00:20:00', '25.0', null),

(1, '2023-10-30 00:21:00', null, '1.0')

We currently get a division by zero error.. sigh, so lets make that safe with DIV0

,round(div0(dist_km, fuel_lt), 2) as mpg_wink

| VEHICLE_ID |

DAY |

DIST_KM |

FUEL_LT |

MPG_WINK |

| 1 |

2023-10-29 |

10 |

0 |

0 |

| 1 |

2023-10-30 |

25 |

6 |

4.17 |

So we can find the prior time of the fuel reading, and then slice that over days I will limit to 4 as here.

So here we are going to use NVL2 to conditionally do stuff if the current row (and the previous row’s fuel) are not null:

select

vehicle_id

,time_ts

,fuel_delta_lt

,nvl2(fuel_delta_lt,

lag(fuel_delta_lt) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_delta

,nvl2(fuel_delta_lt,

lag(nvl2(fuel_delta_lt, time_ts, null)) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_time

from data

order by 1,2;

for the above data we get:

| VEHICLE_ID |

TIME_TS |

FUEL_DELTA_LT |

PRIOR_FUEL_DELTA |

PRIOR_FUEL_TIME |

| 1 |

2023-10-29 19:59:00.000 |

0 |

null |

null |

| 1 |

2023-10-29 20:00:00.000 |

null |

null |

null |

| 1 |

2023-10-30 00:01:00.000 |

5 |

0 |

2023-10-29 19:59:00.000 |

| 1 |

2023-10-30 00:20:00.000 |

null |

null |

null |

| 1 |

2023-10-30 00:21:00.000 |

1 |

5 |

2023-10-30 00:01:00.000 |

so we can see that for rows that “have fuel” we find the prior rows values of the fuel, and of the time for that same row. This also could be done with a OBJECT_CONSTRUCT, and then one LAG on that, and then a pull apart, and for more values, that can be more performant, as always test, and re-test later.

So we now have the prior time, so we can now slice that to days, and given we are going to deal with “overnight” splits, we might as well fully chunk this data into N-days chunks.

with data(vehicle_id, time_ts, dist_delta_km, fuel_delta_lt ) as (

select

$1,

try_to_timestamp_ntz($2, 'yyyy-mm-dd hh:mi:ss'),

try_to_number($3, 10, 1),

try_to_number($4, 10, 1)

from values

(1, '2023-10-29 19:59:00', null, '0.0'),

(1, '2023-10-29 20:00:00', '10.0', null),

(1, '2023-10-30 00:01:00', null, '5.0'),

(1, '2023-10-30 00:20:00', '25.0', null),

(1, '2023-10-30 00:21:00', null, '1.0')

), prior_fuel as (

select

vehicle_id

,time_ts

,fuel_delta_lt

,nvl2(fuel_delta_lt,

lag(fuel_delta_lt) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_delta

,nvl2(fuel_delta_lt,

lag(nvl2(fuel_delta_lt, time_ts, null)) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_time

from data

), max_fuel_range(rn) as (

select * from values (0),(1),(2),(3)

)

select pf.*

,fr.*

,dateadd('day', fr.rn, date_trunc('day', pf.prior_fuel_time)) as p_days

from prior_fuel as pf

left join max_fuel_range as fr

on prior_fuel_time is not null

qualify

(pf.prior_fuel_time is null OR

p_days < pf.time_ts)

order by 1,2;

So I am not loving that OR but for hand waving “something like this” purposes, we now have our data with days splits available:

| VEHICLE_ID |

TIME_TS |

FUEL_DELTA_LT |

PRIOR_FUEL_DELTA |

PRIOR_FUEL_TIME |

RN |

P_DAYS |

| 1 |

2023-10-29 19:59:00.000 |

0 |

null |

null |

null |

null |

| 1 |

2023-10-29 20:00:00.000 |

null |

null |

null |

null |

null |

| 1 |

2023-10-30 00:01:00.000 |

5 |

0 |

2023-10-29 19:59:00.000 |

0 |

2023-10-29 00:00:00.000 |

| 1 |

2023-10-30 00:01:00.000 |

5 |

0 |

2023-10-29 19:59:00.000 |

1 |

2023-10-30 00:00:00.000 |

| 1 |

2023-10-30 00:20:00.000 |

null |

null |

null |

null |

null |

| 1 |

2023-10-30 00:21:00.000 |

1 |

5 |

2023-10-30 00:01:00.000 |

0 |

2023-10-30 00:00:00.000 |

So now we can LEAST/GREATEST the times to correctly time the span, and thus get the span duration, and the record duration, and thus linearize that fuel:

with data(vehicle_id, time_ts, dist_delta_km, fuel_delta_lt ) as (

select

$1,

try_to_timestamp_ntz($2, 'yyyy-mm-dd hh:mi:ss'),

try_to_number($3, 10, 1),

try_to_number($4, 10, 1)

from values

(1, '2023-10-29 19:59:00', null, '0.0'),

(1, '2023-10-29 20:00:00', '10.0', null),

(1, '2023-10-30 00:01:00', null, '5.0'),

(1, '2023-10-30 00:20:00', '25.0', null),

(1, '2023-10-30 00:21:00', null, '1.0'),

(2, '2023-10-28 23:00:00', null, '0.0'),

(2, '2023-10-31 4:00:00', null, '53.0')

), prior_fuel as (

select

vehicle_id

,time_ts

,fuel_delta_lt

,nvl2(fuel_delta_lt,

lag(nvl2(fuel_delta_lt, time_ts, null)) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_time

from data

), max_fuel_range(rn) as (

select * from values (0),(1),(2),(3)

)

select pf.*

,fr.*

,dateadd('day', fr.rn, date_trunc('day', pf.prior_fuel_time)) as p_days

,greatest(pf.prior_fuel_time, p_days) as span_start

,least(dateadd('day',1,p_days), pf.time_ts) as span_end

,(date_part('epoch_second', span_end) - date_part('epoch_second', span_start))/3600 as span_len

,(date_part('epoch_second', pf.time_ts) - date_part('epoch_second', pf.prior_fuel_time))/3600 as record_len

,nvl2(pf.fuel_delta_lt, round(div0(fuel_delta_lt, record_len) * span_len, 3), null ) as linearize_fuel

from prior_fuel as pf

left join max_fuel_range as fr

on prior_fuel_time is not null

qualify

(pf.prior_fuel_time is null OR

p_days < pf.time_ts)

order by 1,2;

| VEHICLE_ID |

TIME_TS |

FUEL_DELTA_LT |

PRIOR_FUEL_TIME |

RN |

P_DAYS |

SPAN_START |

SPAN_END |

SPAN_LEN |

RECORD_LEN |

LINEARIZE_FUEL |

| 1 |

2023-10-29 19:59:00.000 |

0 |

|

|

|

|

|

|

|

|

| 1 |

2023-10-29 20:00:00.000 |

|

|

|

|

|

|

|

|

|

| 1 |

2023-10-30 00:01:00.000 |

5 |

2023-10-29 19:59:00.000 |

0 |

2023-10-29 00:00:00.000 |

2023-10-29 19:59:00.000 |

2023-10-30 00:00:00.000 |

4.016667 |

4.033333 |

4.979 |

| 1 |

2023-10-30 00:01:00.000 |

5 |

2023-10-29 19:59:00.000 |

1 |

2023-10-30 00:00:00.000 |

2023-10-30 00:00:00.000 |

2023-10-30 00:01:00.000 |

0.016667 |

4.033333 |

0.021 |

| 1 |

2023-10-30 00:20:00.000 |

|

|

|

|

|

|

|

|

|

| 1 |

2023-10-30 00:21:00.000 |

1 |

2023-10-30 00:01:00.000 |

0 |

2023-10-30 00:00:00.000 |

2023-10-30 00:01:00.000 |

2023-10-30 00:21:00.000 |

0.333333 |

0.333333 |

1 |

| 2 |

2023-10-28 23:00:00.000 |

0 |

|

|

|

|

|

|

|

|

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-28 23:00:00.000 |

0 |

2023-10-28 00:00:00.000 |

2023-10-28 23:00:00.000 |

2023-10-29 00:00:00.000 |

1 |

53 |

1 |

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-28 23:00:00.000 |

1 |

2023-10-29 00:00:00.000 |

2023-10-29 00:00:00.000 |

2023-10-30 00:00:00.000 |

24 |

53 |

24 |

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-28 23:00:00.000 |

2 |

2023-10-30 00:00:00.000 |

2023-10-30 00:00:00.000 |

2023-10-31 00:00:00.000 |

24 |

53 |

24 |

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-28 23:00:00.000 |

3 |

2023-10-31 00:00:00.000 |

2023-10-31 00:00:00.000 |

2023-10-31 04:00:00.000 |

4 |

53 |

4 |

yippie!

Which should be jiggled around a little more, and that linearization should have the multiplication prior to the division for numerical stability.

so should look more like:

with data(vehicle_id, time_ts, dist_delta_km, fuel_delta_lt ) as (

select

$1,

try_to_timestamp_ntz($2, 'yyyy-mm-dd hh:mi:ss'),

try_to_number($3, 10, 1),

try_to_number($4, 10, 1)

from values

(1, '2023-10-29 19:59:00', null, '0.0'),

(1, '2023-10-29 20:00:00', '10.0', null),

(1, '2023-10-30 00:01:00', null, '5.0'),

(1, '2023-10-30 00:20:00', '25.0', null),

(1, '2023-10-30 00:21:00', null, '1.0'),

(2, '2023-10-28 23:00:00', null, '0.0'),

(2, '2023-10-31 4:00:00', null, '53.0')

), prior_fuel as (

select

vehicle_id

,time_ts

,fuel_delta_lt

,nvl2(fuel_delta_lt,

lag(nvl2(fuel_delta_lt, time_ts, null)) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_time

from data

), max_fuel_range(rn) as (

select * from values (0),(1),(2),(3)

), fuel_spans as (

select pf.*

,fr.*

,dateadd('day', fr.rn, date_trunc('day', pf.prior_fuel_time)) as p_days

from prior_fuel as pf

left join max_fuel_range as fr

on prior_fuel_time is not null

qualify

(pf.prior_fuel_time is null OR

p_days < pf.time_ts)

)

select fs.* exclude (prior_fuel_time, rn, p_days)

,greatest(fs.prior_fuel_time, fs.p_days) as span_start

,least(dateadd('day',1,fs.p_days), fs.time_ts) as span_end

,(date_part('epoch_second', span_end) - date_part('epoch_second', span_start)) as span_len

,(date_part('epoch_second', fs.time_ts) - date_part('epoch_second', fs.prior_fuel_time)) as record_len

,nvl2(fs.fuel_delta_lt, round(div0(fuel_delta_lt * span_len, record_len),4), null ) as linearize_fuel

from fuel_spans as fs

order by 1,2, span_start;

giving:

| VEHICLE_ID |

TIME_TS |

FUEL_DELTA_LT |

SPAN_START |

SPAN_END |

SPAN_LEN |

RECORD_LEN |

LINEARIZE_FUEL |

| 1 |

2023-10-29 19:59:00.000 |

0 |

|

|

|

|

|

| 1 |

2023-10-29 20:00:00.000 |

|

|

|

|

|

|

| 1 |

2023-10-30 00:01:00.000 |

5 |

2023-10-29 19:59:00.000 |

2023-10-30 00:00:00.000 |

14,460 |

14,520 |

4.9793 |

| 1 |

2023-10-30 00:01:00.000 |

5 |

2023-10-30 00:00:00.000 |

2023-10-30 00:01:00.000 |

60 |

14,520 |

0.0207 |

| 1 |

2023-10-30 00:20:00.000 |

|

|

|

|

|

|

| 1 |

2023-10-30 00:21:00.000 |

1 |

2023-10-30 00:01:00.000 |

2023-10-30 00:21:00.000 |

1,200 |

1,200 |

1 |

| 2 |

2023-10-28 23:00:00.000 |

0 |

|

|

|

|

|

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-28 23:00:00.000 |

2023-10-29 00:00:00.000 |

3,600 |

190,800 |

1 |

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-29 00:00:00.000 |

2023-10-30 00:00:00.000 |

86,400 |

190,800 |

24 |

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-30 00:00:00.000 |

2023-10-31 00:00:00.000 |

86,400 |

190,800 |

24 |

| 2 |

2023-10-31 04:00:00.000 |

53 |

2023-10-31 00:00:00.000 |

2023-10-31 04:00:00.000 |

14,400 |

190,800 |

4 |

So here we have “one signal” fuel, stretched over time linearly, so that fuel used yesterday can be counted against “yesterday”. So bringing the original sum code back to life, and keeping the OG code, and the linear fuel:

with data(vehicle_id, time_ts, dist_delta_km, fuel_delta_lt ) as (

select

$1,

try_to_timestamp_ntz($2, 'yyyy-mm-dd hh:mi:ss'),

try_to_number($3, 10, 1),

try_to_number($4, 10, 1)

from values

(1, '2023-10-29 19:59:00', null, '0.0'),

(1, '2023-10-29 20:00:00', '10.0', null),

(1, '2023-10-30 00:01:00', null, '5.0'),

(1, '2023-10-30 00:20:00', '25.0', null),

(1, '2023-10-30 00:21:00', null, '1.0')--,

--(2, '2023-10-28 23:00:00', null, '0.0'),

--(2, '2023-10-31 4:00:00', null, '53.0')

), prior_fuel as (

select

vehicle_id

,time_ts

,dist_delta_km

,fuel_delta_lt

,nvl2(fuel_delta_lt,

lag(nvl2(fuel_delta_lt, time_ts, null)) ignore nulls over (partition by vehicle_id order by time_ts)

,null) as prior_fuel_time

from data

), max_fuel_range(rn) as (

select * from values (0),(1),(2),(3)

), fuel_spans as (

select pf.*

,fr.*

,dateadd('day', fr.rn, date_trunc('day', pf.prior_fuel_time)) as p_days

from prior_fuel as pf

left join max_fuel_range as fr

on prior_fuel_time is not null

qualify

(pf.prior_fuel_time is null OR

p_days < pf.time_ts)

), fuel_linearized as (

select fs.* exclude (prior_fuel_time, rn, p_days)

,greatest(fs.prior_fuel_time, fs.p_days) as span_start

,least(dateadd('day',1,fs.p_days), fs.time_ts) as span_end

,(date_part('epoch_second', span_end) - date_part('epoch_second', span_start)) as span_len

,(date_part('epoch_second', fs.time_ts) - date_part('epoch_second', fs.prior_fuel_time)) as record_len

,nvl2(fs.fuel_delta_lt, round(div0(fuel_delta_lt * span_len, record_len),4), null ) as linearize_fuel

from fuel_spans as fs

)

select

vehicle_id

,nvl(span_start, time_ts)::date as day

,sum(dist_delta_km) as dist_km

,sum(iff(span_end = time_ts, fuel_delta_lt, null)) as og_fuel_lt

,round(div0(dist_km, og_fuel_lt), 2) as og_mpg

,sum(linearize_fuel) as lin_fuel_lt

,round(div0(dist_km, lin_fuel_lt), 2) as lin_mpg

from fuel_linearized as fl

group by 1,2

order by 1,2;

| VEHICLE_ID |

DAY |

DIST_KM |

OG_FUEL_LT |

OG_MPG |

LIN_FUEL_LT |

LIN_MPG |

| 1 |

2023-10-29 |

10 |

null |

null |

4.9793 |

2.01 |

| 1 |

2023-10-30 |

25 |

6 |

4.17 |

1.0207 |

24.49 |

So we should linearize the distance also… which is a rinse and repeat of the above.

But the next thing to consider is “how much fuel is spent while the vehicle is off”, which is to say, if the vehicle does report “anything” while off, and is off for 1 day, so like that vehicle 2 data lets say, but we also know it was off, we do not want to treat that time equally. This is Weighted Linearization, and I will post on that next.